Content navigation:

- Adopting AI: trust is the issue

- XAI or how to make AI great again

- AI in pricing: embrace the inevitable

- Competera interpretability features

- Conclusion

Adopting AI: trust is the issue

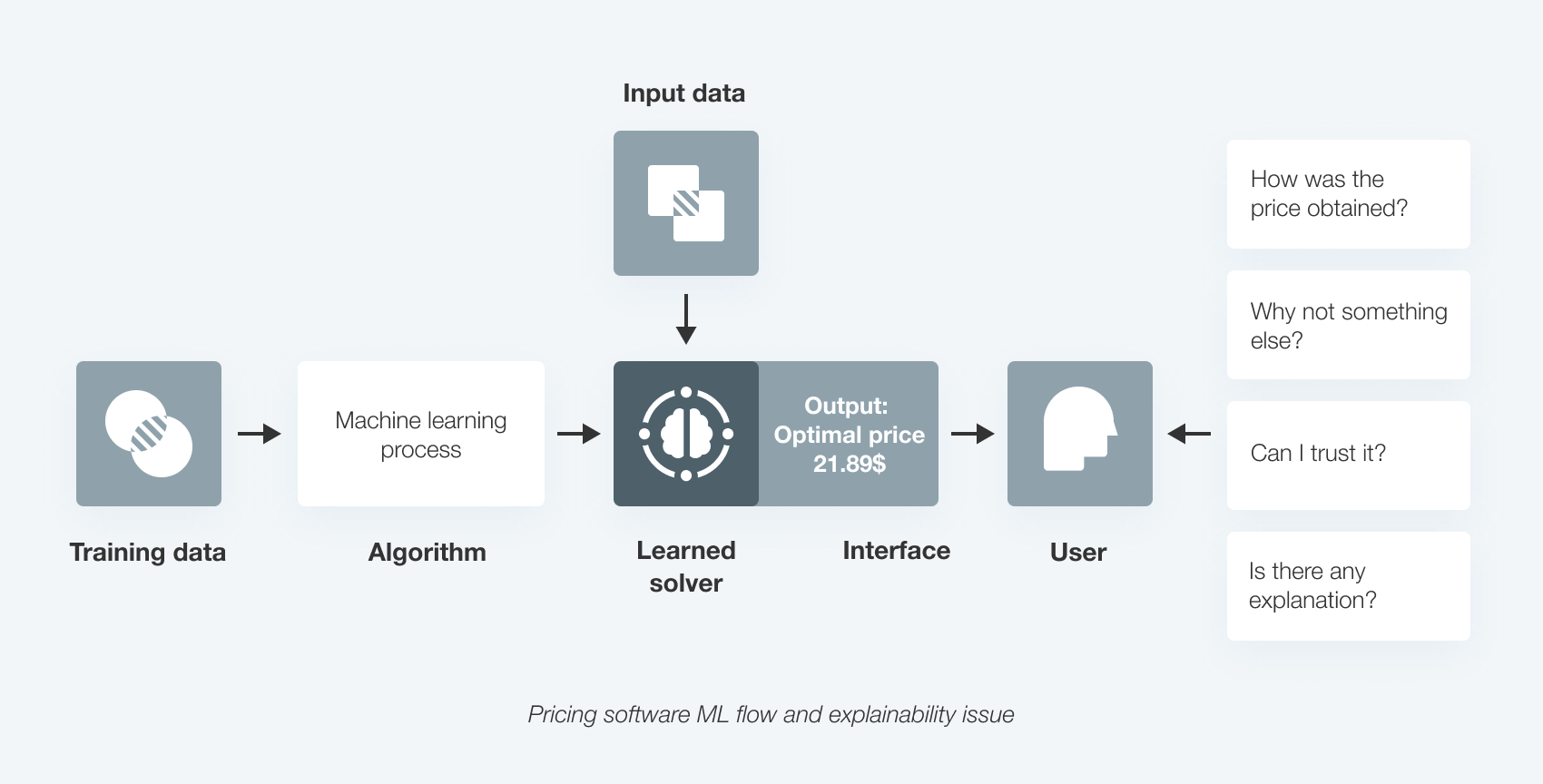

There is every reason to believe that we live in the era of AI: it has demonstrated its efficacy across domains ranging from assisting in surgery to powering self-driving cars and generating optimal prices in retail. Yet AI skeptics still have one argument for denying that the AI era has begun, and that is end-user trust. The argument is not without merit. Mistrust of AI technology and its solutions will hold back the mass adoption of AI until end-users can fully understand the processes behind advanced algorithms.

Sometimes technology develops far faster than people can adopt it and get used to it, and AI-powered technologies are a case in point. Recent research by a group of computer scientists from Fayetteville State University in North Carolina, US, shows that trust remains the major issue with AI: people are the end-users, and they cannot fully trust a technology until they know how it processes information. Sambit Bhattacharya of Fayetteville State University notes that overcoming the 'lack of transparency' in the way AI processes information, popularly called the "black box problem", is crucial to building trust in advanced technologies.

XAI or how to make AI great again

In the first two decades of the twenty-first century, several landmark projects based on innovative uses of AI were introduced, but most of them failed to become real game-changers in their industries. IBM Watson, and Watson for Oncology in particular, is a telling illustration. Watson started as an unprecedented AI-based project that was supposed to revolutionize various industries, healthcare in particular. According to Vyacheslav Polonski, Ph.D., UX researcher for Google and founder of Avantgarde Analytics, IBM's attempt to promote Watson was a PR disaster. End-users didn't trust IBM's supercomputer and rejected every suggestion that appeared suspicious or ambiguous.

IBM Watson and similar cases convinced AI promoters that it is not enough to design a superior algorithm; the algorithm must also be explainable to end-users. Explainability quickly became a critical requirement for AI across industries and contexts. As Jack Dorsey of Twitter put it: “We need to do a much better job at explaining how our algorithms work. Ideally opening them up so that people can actually see how they work. This is not easy for anyone to do”. In recent years, more and more businesses have started investing in explainable AI, or simply XAI, and pricing software providers are no exception.

AI in pricing: embrace the inevitable

Before we dive into how we at Competera work with explainable AI, let's briefly recap the most significant implications of using AI in pricing. AI-driven algorithms and modern machine learning (ML) models have become the core technology behind advanced price optimization software. AI and ML help retailers set optimal prices in numerous ways; here are a few examples that illustrate the trend.

A good example of how AI-based algorithms contribute to sustainable pricing is the shift from SKU-centered to portfolio-based pricing. Retailers that rely on manual price management, consciously or not, use SKU-centered pricing: each price is set without accounting for how it shifts demand between related products. This creates cannibalization effects that damage price perception and lead to strategic cash leakage in the long run.

In contrast, Competera's portfolio pricing relies on a demand-based engine powered by neural networks that measure each product's own-price elasticity and its cross-elasticities with other products, so that goals at both the product and the category level are achieved. Every recommendation owes its accuracy to context-dependent price elasticities and a high-performance solver capable of sifting through billions of possible price combinations to find the right one.
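The difference between SKU-centered and portfolio pricing can be made concrete with a toy model. The sketch below is a minimal illustration, not Competera's engine: it assumes a hypothetical two-product category with a constant-elasticity demand model, made-up own- and cross-elasticities, and an exhaustive grid search over 81 price pairs standing in for the high-performance solver (a real solver could not enumerate billions of combinations this way). All names (`units`, `portfolio_profit`, `sku_centered`, `portfolio`) and numbers are hypothetical.

```python
from itertools import product

# Hypothetical two-product category (all numbers are illustrative assumptions).
BASE_PRICE = {"A": 10.0, "B": 12.0}
BASE_UNITS = {"A": 100.0, "B": 80.0}
UNIT_COST = {"A": 5.0, "B": 7.0}

# ELASTICITY[i][j]: % change in units of i per 1% change in the price of j.
# Diagonal = own-price elasticity; off-diagonal = cross-elasticity (>0: substitutes).
ELASTICITY = {
    "A": {"A": -2.5, "B": 0.8},
    "B": {"A": 0.9, "B": -1.8},
}

# Candidate prices: each base price scaled from -20% to +20% in 5% steps.
CANDIDATES = {
    sku: [round(BASE_PRICE[sku] * (0.80 + 0.05 * k), 2) for k in range(9)]
    for sku in BASE_PRICE
}


def units(prices):
    """Constant-elasticity demand in which every price affects every SKU."""
    result = {}
    for i in prices:
        q = BASE_UNITS[i]
        for j, p in prices.items():
            q *= (p / BASE_PRICE[j]) ** ELASTICITY[i][j]
        result[i] = q
    return result


def portfolio_profit(prices):
    """Gross profit of the whole category, cross effects included."""
    q = units(prices)
    return sum((prices[i] - UNIT_COST[i]) * q[i] for i in prices)


def own_profit(sku, price):
    """Profit of one SKU in isolation: other prices frozen, cross effects ignored."""
    q = BASE_UNITS[sku] * (price / BASE_PRICE[sku]) ** ELASTICITY[sku][sku]
    return (price - UNIT_COST[sku]) * q


def sku_centered():
    """Pick each SKU's best price independently of the others."""
    return {sku: max(CANDIDATES[sku], key=lambda p: own_profit(sku, p)) for sku in BASE_PRICE}


def portfolio():
    """Score every price combination on total category profit."""
    skus = list(BASE_PRICE)
    best = max(
        product(*(CANDIDATES[s] for s in skus)),
        key=lambda combo: portfolio_profit(dict(zip(skus, combo))),
    )
    return dict(zip(skus, best))


if __name__ == "__main__":
    for name, prices in (("SKU-centered", sku_centered()), ("portfolio", portfolio())):
        print(f"{name:>12}: {prices} -> category profit {portfolio_profit(prices):.2f}")
```

Under these made-up numbers, the SKU-centered pass cuts A's price because A looks attractive on its own, while the portfolio search keeps A's price higher, since a cheaper A pulls demand away from B (B's cross-elasticity with respect to A is positive). That gap is the cannibalization effect described above.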

AI also already helps retailers by building predictive models. Put simply, ML-based algorithms can accurately predict how customers will react to specific price changes and forecast demand for a given product. The AI-powered pricing engine then uses these predictions to produce optimal price recommendations for the business goals set by the retailer (increasing sales volume, gaining extra margin, etc.). This is how AI lets retailers safely test various promo and pricing strategies and see what business impact each decision would have.
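The goal-driven step can be sketched in a few lines. This is a hedged illustration under stated assumptions: a hypothetical linear forecast, `forecast_units`, stands in for the ML demand model, the unit cost and candidate prices are made up, and each goal is scored by brute force over the candidates.

```python
# Hypothetical stand-in for a fitted ML forecast: weekly units fall linearly with price.
def forecast_units(price: float) -> float:
    return max(0.0, 200.0 - 10.0 * price)


UNIT_COST = 6.0
CANDIDATE_PRICES = [8.0, 9.0, 10.0, 11.0, 12.0, 13.0, 14.0]

# Each business goal scores a candidate price using the same demand forecast.
GOALS = {
    "volume": lambda p: forecast_units(p),
    "revenue": lambda p: p * forecast_units(p),
    "margin": lambda p: (p - UNIT_COST) * forecast_units(p),
}


def recommend(goal: str):
    """Return (price, forecast units, goal value) for the best candidate price."""
    score = GOALS[goal]
    best = max(CANDIDATE_PRICES, key=score)
    return best, forecast_units(best), score(best)


if __name__ == "__main__":
    for goal in GOALS:
        price, units, value = recommend(goal)
        print(f"{goal:>7}: price {price:.2f}, units {units:.0f}, {goal} {value:.0f}")
```

With these made-up numbers, the volume goal picks 8.00, the revenue goal picks 10.00 and the margin goal picks 13.00: the same forecast yields different recommendations depending on the goal the retailer sets.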

As you can see, AI and ML open up enormous opportunities for retailers. But, once again, the lack of trust undermines the mass adoption of solutions like Competera's. This is why every provider is eager to overcome the 'black box' challenge and earn users' trust in the algorithms. At Competera, we have made remarkable progress toward explainable AI. Let's see how.

Competera interpretability features

Frankly, the story of IBM Watson and other pioneering AI-driven projects is familiar to us at Competera. We've all been there: end-users get an advanced AI solution, but they question the algorithm's recommendations as long as it remains a 'black box'. Eventually, AI-powered suggestions that seem to contradict human logic are ignored. Such selective use undermines the overall effectiveness of the solution, which makes end-users even more skeptical of AI.

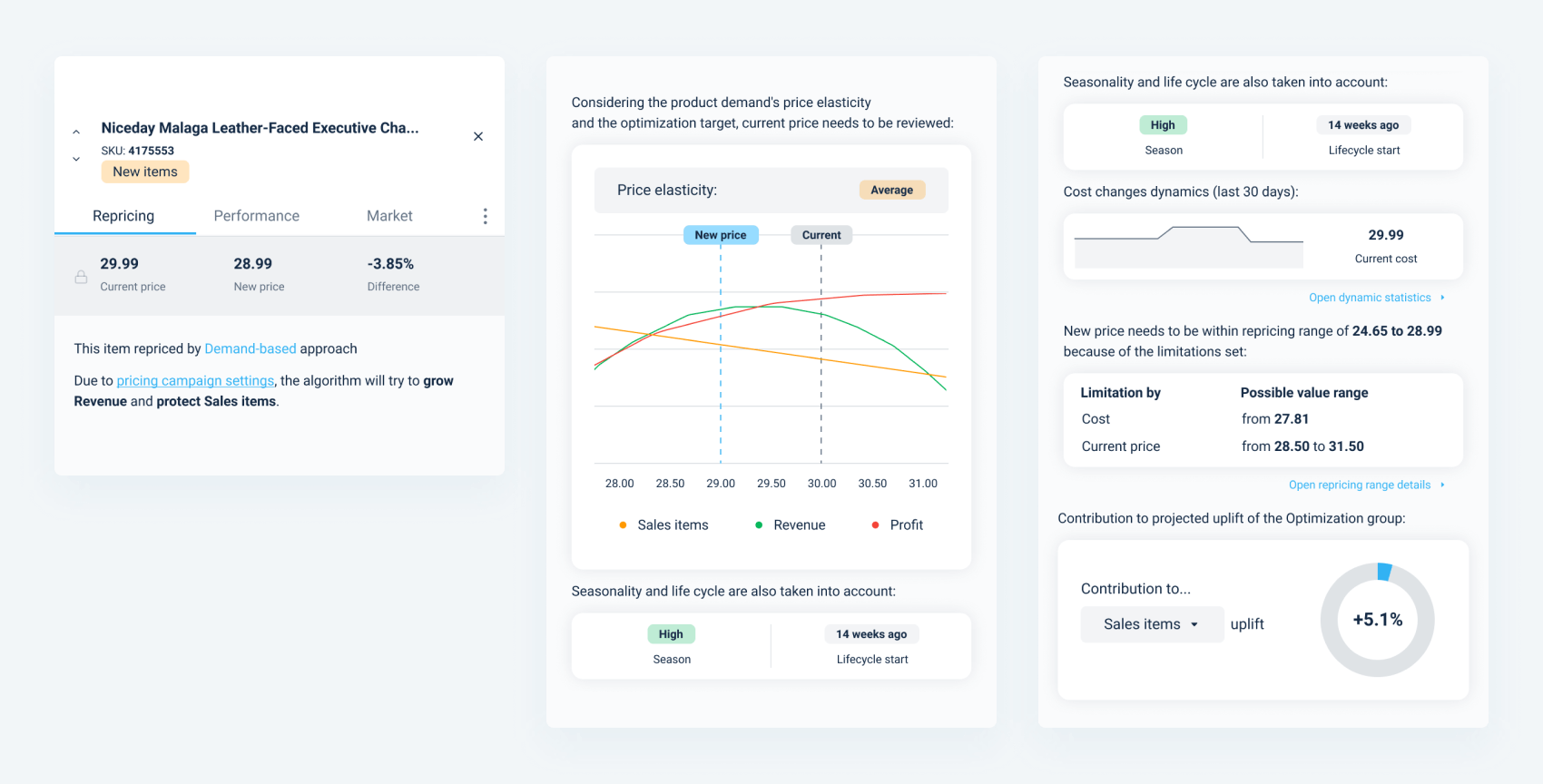

So how do you break this vicious circle of mistrust? At Competera, we did it by adding a set of interpretability features that let users figure out the reasoning behind a recommendation. Clicking an SKU / PL ID code opens a panel with detailed information on the main factors the algorithm took into account when crafting a price recommendation.

The new interpretability feature enables users to:

-

get useful insights on what was behind the decisions of the Price Optimization engine;

-

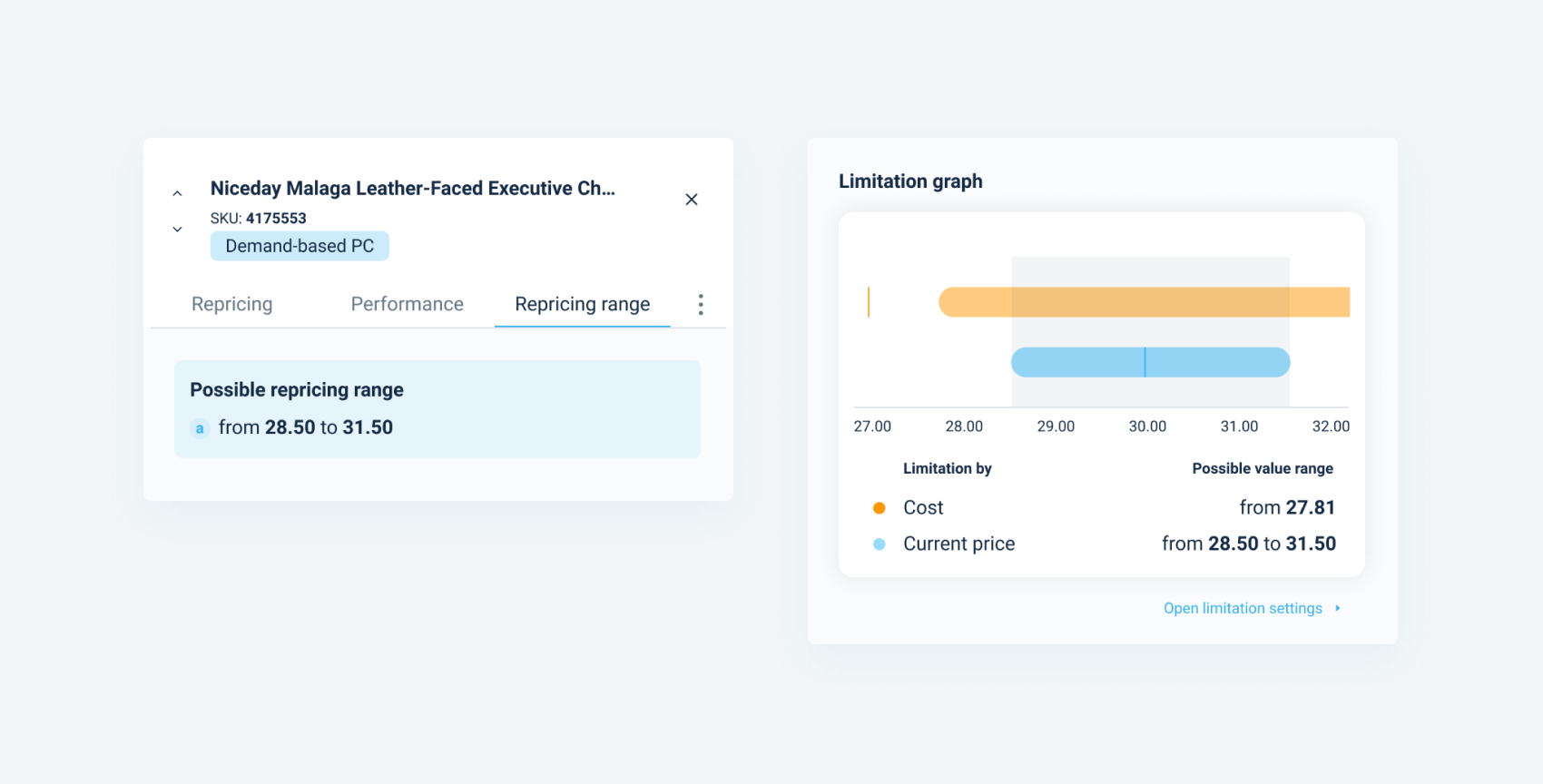

check out how the set limitations have impacted the search range;

-

find out what the demand elasticity curves look like;

-

understand how the new price point impacts own product sales and what halo effect it has on other products in the category.

Since the core idea behind the interpretability features was to make every AI-powered recommendation as clear and explainable as possible, we reveal the range within which the price could change. Users can now not only check the range limits but also get a clear explanation of how both the upper and lower limits were determined. The price search range can be affected by a number of econometric factors and business constraints (e.g., acceptable minimum markup, maximum possible % of price change vs the previous period, seasonality, price index, specific product parameters, competitive data, acceptable % of a price change, cost, price thresholds, business metric to grow or to protect, etc.).
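The idea of an explainable search range can be sketched as an intersection of bounds in which each limit remembers the rule that produced it. This is a minimal sketch that assumes only a few of the constraints listed above (minimum markup, maximum % change vs the previous price, and optional floor and ceiling thresholds); `Bound` and `search_range` are hypothetical names, not Competera's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Bound:
    value: float
    reason: str  # human-readable rule that produced this bound


def search_range(cost, previous_price, min_markup, max_change,
                 floor: Optional[float] = None, ceiling: Optional[float] = None):
    """Intersect the hypothetical constraints; report which rule sets each limit."""
    lowers = [
        Bound(cost * (1 + min_markup), f"minimum markup of {min_markup:.0%} over cost"),
        Bound(previous_price * (1 - max_change), f"max {max_change:.0%} cut vs previous price"),
    ]
    uppers = [
        Bound(previous_price * (1 + max_change), f"max {max_change:.0%} rise vs previous price"),
    ]
    if floor is not None:
        lowers.append(Bound(floor, "price floor threshold"))
    if ceiling is not None:
        uppers.append(Bound(ceiling, "price ceiling threshold"))
    # The binding lower limit is the largest one; the binding upper limit is the smallest.
    return max(lowers, key=lambda b: b.value), min(uppers, key=lambda b: b.value)


if __name__ == "__main__":
    low, high = search_range(cost=8.0, previous_price=10.0, min_markup=0.20,
                             max_change=0.10, ceiling=10.99)
    print(f"lower {low.value:.2f} ({low.reason}); upper {high.value:.2f} ({high.reason})")
```

With these inputs the lower limit is 9.60, set by the 20% minimum markup, and the upper limit is 10.99, set by the ceiling threshold: each limit carries its own explanation, which is the kind of information the panel surfaces.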

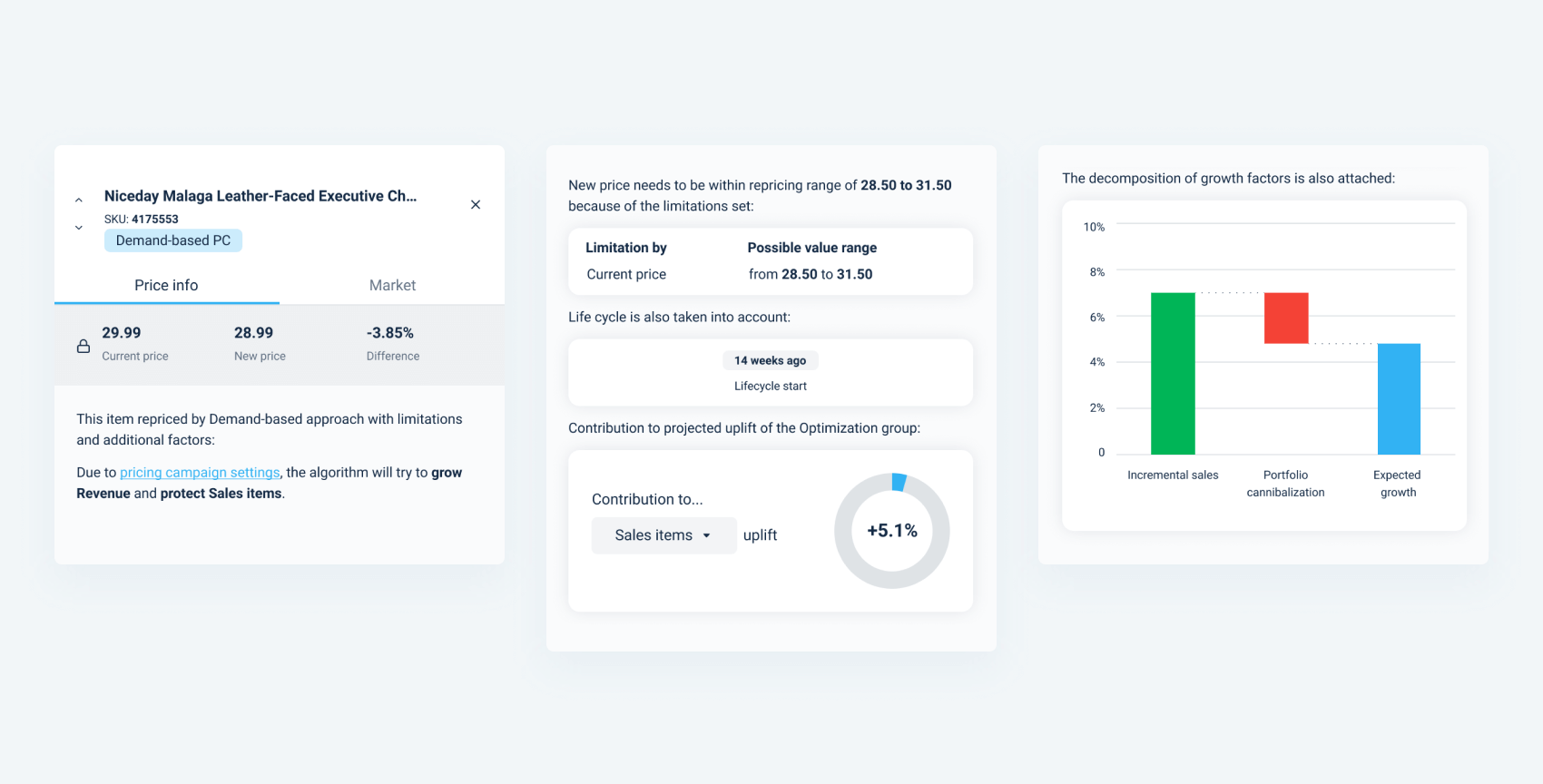

Another important element that is now explainable is the context-dependent price elasticity of demand used by the algorithm. Users get an explicit visualization showing how demand would react to any price change within the limits. It is important to remember that an optimal price always depends on the goals set by the retailer.

That is why the interpretability panel shows both the primary and the secondary goals, and the visualization of price changes helps users make sure that an adjustment serves the retailer's specific goals (revenue growth, sales volume increase, etc.). The panel also reveals preset cross-dependencies between the products in an optimization group (e.g., the price of product A must always stay at least 10% below the price of product B).
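Two of the panel's elements can be sketched under simple assumptions: sampling a demand curve over the allowed price range, and checking a cross-dependency rule such as the 10% gap between products A and B. The constant-elasticity curve with a single elasticity value is a simplification (the engine described above estimates context-dependent elasticities), and `demand_curve` and `satisfies_gap` are hypothetical helpers.

```python
from itertools import product


def demand_curve(base_price, base_units, elasticity, low, high, steps=5):
    """Sample a constant-elasticity demand curve at evenly spaced prices in [low, high]."""
    points = []
    for k in range(steps):
        price = low + (high - low) * k / (steps - 1)
        units = base_units * (price / base_price) ** elasticity
        points.append((round(price, 2), round(units, 1)))
    return points


def satisfies_gap(price_a, price_b, min_gap=0.10):
    """Cross-dependency rule: A's price must stay at least min_gap below B's."""
    return price_a <= price_b * (1 - min_gap)


if __name__ == "__main__":
    # Hypothetical inputs: A's allowed range is 9.00 to 11.00; B has two candidate prices.
    curve_a = demand_curve(base_price=10.0, base_units=100.0, elasticity=-1.5, low=9.0, high=11.0)
    for price, units in curve_a:
        print(f"A at {price:.2f} -> {units:.1f} units")
    allowed = [(pa, pb) for (pa, _), pb in product(curve_a, [10.5, 11.5]) if satisfies_gap(pa, pb)]
    print("pairs that respect the 10% gap:", allowed)
```

Keeping the rule as a separate predicate means it can be reported by name in the panel and reused to filter any set of candidate price pairs.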

The examples and factors outlined above show how complex a pricing architecture can be, which is why different settings sometimes contradict each other. If such a conflict occurs, the platform displays a notification with a clear explanation of which parameters are contradictory.
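One way to explain a conflict is to report the specific pair of rules that cannot both hold. The sketch below assumes every setting can be reduced to a price interval for a single SKU; the rule names and values in `RULES` are hypothetical, and `find_conflicts` is an illustrative helper, not the platform's notification logic.

```python
from itertools import product

# Hypothetical rules, each narrowing the price of one SKU to [low, high]; None = unbounded.
RULES = {
    "minimum markup of 30% over cost 8.00": (10.40, None),
    "max 5% change vs previous price 10.00": (9.50, 10.50),
    "price ceiling threshold": (None, 9.99),
}


def find_conflicts(rules):
    """List every pair of rules where one's lower limit exceeds another's upper limit.

    For interval constraints the intersection is empty exactly when the largest
    lower limit exceeds the smallest upper limit, so checking pairs is enough
    (assuming each rule is consistent on its own).
    """
    conflicts = []
    for (name_lo, (low, _)), (name_hi, (_, high)) in product(rules.items(), repeat=2):
        if name_lo != name_hi and low is not None and high is not None and low > high:
            conflicts.append(
                f"'{name_lo}' requires at least {low:.2f}, "
                f"but '{name_hi}' allows at most {high:.2f}"
            )
    return conflicts


if __name__ == "__main__":
    for message in find_conflicts(RULES) or ["no conflicts"]:
        print(message)
```

Here the markup rule (at least 10.40) clashes with the ceiling threshold (at most 9.99), so the message names exactly those two settings rather than just reporting that no valid price exists.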

As mentioned above, portfolio-based pricing is one of the most important implications of using AI in pricing. It means that the algorithm considers both explicit and implicit cross-dependencies within the product group when crafting the optimal price for every single SKU.

So every recommendation can be evaluated from two angles: the impact of an SKU's new price on other products' metrics, and the impact of other products' new prices on that SKU's performance. The interpretability panel visualizes both types of dependencies. For example, a user can choose a particular SKU and see how its new price contributes to the sales volume of the entire optimization group through both its own-price elasticity and its cross-elasticities with other products.
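The two angles can be sketched with a first-order (linear) approximation around current prices, in which the change in units of product i caused by product j's price change is: base units of i × elasticity(i, j) × fractional price change of j. All elasticities, volumes and price changes below are hypothetical, and the approximation is only reasonable for small price moves; `effect`, `outgoing` and `incoming` are illustrative names.

```python
# Hypothetical optimization group (all numbers are illustrative assumptions).
BASE_UNITS = {"A": 100.0, "B": 80.0, "C": 50.0}

# ELASTICITY[i][j]: % change in units of i per 1% change in the price of j.
ELASTICITY = {
    "A": {"A": -2.0, "B": 0.5, "C": 0.2},
    "B": {"A": 0.6, "B": -1.5, "C": 0.0},
    "C": {"A": 0.3, "B": 0.0, "C": -1.2},
}

# Planned price changes as fractions of the current price (-0.10 = 10% cut).
PRICE_CHANGE = {"A": -0.10, "B": 0.05, "C": 0.0}


def effect(i, j):
    """First-order change in units of i caused by the price change of j."""
    return BASE_UNITS[i] * ELASTICITY[i][j] * PRICE_CHANGE[j]


def outgoing(sku):
    """Angle 1: how this SKU's new price moves every product's volume."""
    return {i: effect(i, sku) for i in BASE_UNITS}


def incoming(sku):
    """Angle 2: how every product's new price moves this SKU's volume."""
    return {j: effect(sku, j) for j in BASE_UNITS}


if __name__ == "__main__":
    print("A's price change, by affected product:", outgoing("A"))
    print("Drivers of A's volume change:", incoming("A"))
    print("A's net contribution to group volume:", round(sum(outgoing("A").values()), 2))
```

With these made-up numbers, A's 10% cut adds about 20 units of its own sales but takes about 4.8 units from B and 1.5 from C, so its net contribution to group volume is about 13.7 units.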

Ultimately, these features make the processes behind every AI-driven decision transparent and dispel end-users' doubts. They also give users important insights for evaluating the relevance of specific pricing campaign parameters and reconsidering the overall effectiveness of their pricing strategy. In other words, explainable recommendations help retailers manage pricing transparently and proactively, and focus on pricing strategy rather than execution.

Conclusion

It is not enough for tech companies to design an advanced AI-driven solution: it will never be used to its full capacity as long as end-users do not trust it. That is why the future belongs to explainable AI.

Advanced pricing solutions illustrate well how transparency and interpretability are becoming core drivers of the mass adoption of pricing software. In this regard, Competera's interpretability features are a showcase of the power of explainable AI in pricing.

FAQ

The 'black box' problem, that is, mistrust of a technology whose reasoning is hidden, undermines the mass adoption of AI. There are good reasons to say that AI won't be fully adopted until end-users fully understand the processes behind advanced algorithms.

Explainable AI (XAI) is an approach to developing AI technology that puts a strong emphasis on the explainability, interpretability, and transparency of the decisions AI makes. XAI has quickly become a critical requirement for AI across all industries and contexts.

There are different approaches to implementing explainable AI in pricing solutions. One of the most popular and effective is to give users complete visibility into all the factors and determinants behind a pricing recommendation.